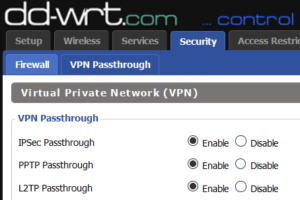

For a long time, I had enabled VPN passthrough with DD-WRT from an IPSEC endpoint running inside the network with the SPI firewall disabled. The VPN was mostly reliable, but every once in a while I would need to reset the tunnel on both sides. I did this until I discovered the magic of enabling the SPI firewall. Just as with a Cisco router, the DD-WRT router needs to inspect the packets to know what to do with IPSEC. So, if you want IPSEC passthrough support, you really should enable both the option in step 1 AND the option in step 2 (below).

Tuning Ext4 for MythTV

When using an ext4 filesystem for MythTV, here are a few tricks to help improve speed.

First of all, always create a separate partition for your recordings! Here’s how to ensure that it is optimal.

Let’s say that you create a partition on device /dev/sdb.

- mkfs.ext4 -T largefile /dev/sdb1

- tune2fs -o journal_data_writeback /dev/sdb1

- echo "/sbin/blockdev --setra 4096 /dev/sdb" >> /etc/rc.local

In the first step, we format the partition as ext4 with a profile tuned for large files. This reduces the number of inodes, maximizing the available space and (unconfirmed, but hypothesized) minimizing the journal size.

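In the second step, we enable writeback journaling as a default mount option for the filesystem. This is normally not a safe choice, but here we are only protecting TV content, which already tolerates errors well. Setting this option allows the OS to write data to disk without keeping it in 100% lock-step with the journal.

In the third step, we set the read-ahead for the device to 4096 sectors (2 MiB, since blockdev counts in 512-byte sectors) each time /etc/rc.local runs at boot.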

Now add these options to /etc/fstab.

- nodiratime,noatime

These options in the fstab tell the OS not to update the last-accessed times on files and directories every time a file is accessed. This reduces unnecessary writes, which improves overall performance.

Your fstab might look something like this:

UUID=2a59a579-9779-455c-bfed-d7e69c5ec533 /shared/recordings ext4 defaults,nodiratime,noatime 0 0
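Before rebooting, it can be handy to confirm that an fstab entry really carries the option you expect. The following is only a minimal sketch: has_mount_option is a hypothetical helper name (not part of any standard tool), and it simply looks at the fourth whitespace-separated field of an fstab-style line.

```shell
#!/bin/bash
# has_mount_option is a hypothetical helper, not a standard command.
# $1 = one fstab-style line, $2 = option to look for.
# Returns 0 if the option appears in the options (4th) field, 1 otherwise.
has_mount_option() {
    opts=$(printf '%s\n' "$1" | awk '{ print $4 }')
    # Wrap in commas so that "atime" does not match "noatime".
    case ",$opts," in
        *",$2,"*) return 0 ;;
        *) return 1 ;;
    esac
}

entry="UUID=2a59a579-9779-455c-bfed-d7e69c5ec533 /shared/recordings ext4 defaults,nodiratime,noatime 0 0"
if has_mount_option "$entry" noatime; then
    echo "noatime is set"
fi
```

The same function could be fed lines read from /etc/fstab, assuming the recordings partition is listed there.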

That’s it! Happy watching.

Xenserver Heterogeneous CPUs in Pool

I have been working to get some Xenservers set up in the same pool that have different CPU versions. Because the CPUs have different features, I get an error message that the CPUs are not homogeneous. To help rectify this, I created the following script and then followed the instructions below.

/root/get-cpu-features

#!/usr/bin/perl

$FEATURES2=$ARGV[0];

if (exists $ARGV[1]) {

$FEATURES1=$ARGV[1];

} else {

chomp($FEATURES1=`xe host-cpu-info | grep " features:" | cut -d':' -f2 | cut -d' ' -f2`);

}

if (!exists $ARGV[0]) {

print "\nUsage: ./get-cpu-features [features-key1] [features-key2]\n\n";

print "Local CPU Features: $FEATURES1\n";

exit;

}

sub dec2bin {

my $str = unpack("B32", pack("N", shift));

$str =~ s/^0+(?=\d)//; # otherwise you'll get leading zeros

return $str;

}

sub bin2dec {

return unpack("N", pack("B32", substr("0" x 32 . shift, -32)));

}

print "Local : ".$FEATURES1."\n";

print "Remote: ".$FEATURES2."\n";

$count=1;

@GROUP1ARRAY=();

@GROUP2ARRAY=();

foreach $GROUP1 (split(/\-/,$FEATURES1)) {

my $GROUP1BIN=dec2bin(hex($GROUP1))."\n";

my $GROUP1BINPADDED = sprintf("%033s", $GROUP1BIN);

$GROUP1ARRAY[$count]=$GROUP1BINPADDED;

$count++;

}

$count=1;

foreach $GROUP2 (split(/\-/,$FEATURES2)) {

my $GROUP2BIN=dec2bin(hex($GROUP2))."\n";

my $GROUP2BINPADDED = sprintf("%033s", $GROUP2BIN);

$GROUP2ARRAY[$count]=$GROUP2BINPADDED;

$count++;

}

@output=();

print "\nMerged: ";

for ($i=1; $i<=4; $i++) {

$newgroup="";

my @bin1=split(//,$GROUP1ARRAY[$i],33);

my @bin2=split(//,$GROUP2ARRAY[$i],33);

for ($j=0; $j<32; $j++) {

$result=$bin1[$j]*$bin2[$j];

$newgroup=$newgroup.$result;

}

printf('%08x',bin2dec($newgroup));

if ($i < 4) {

print "-";

}

}

print "\n";

On server 1

/root/get-cpu-features

The output will look something like this:

Usage: ./get-cpu-features [features-key1] [features-key2]

Local CPU Features: 0000e3bd-bfebfbff-00000001-20100800

On server 2, run the script again, but this time specify the key from server 1 as an argument to the script.

/root/get-cpu-features 0000e3bd-bfebfbff-00000001-20100800

Now the output will look like this:

Local : 0098e3fd-bfebfbff-00000001-28100800

Remote: 0000e3bd-bfebfbff-00000001-20100800

Merged: 0000e3bd-bfebfbff-00000001-20100800

The compatible feature key for all nodes is the one labeled merged, in this case, 0000e3bd-bfebfbff-00000001-20100800.

On each of the two nodes, we now need to set the feature key. We can do this by running this command on each node:

xe host-set-cpu-features features=0000e3bd-bfebfbff-00000001-20100800

Now reboot the nodes and then join them to the same pool.

Note: If you have more than two nodes, then you will need to add a second argument when running the script on nodes 3+. That second argument should always be the "Merged" key from each previous server that the script was run against. This will ensure that all nodes are accounted for in the final merged key. On the last node, the merged key that is produced will be the one that you need to use for every node.
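For reference, the merge that the Perl script performs amounts to a bitwise AND of each of the four 32-bit groups in the feature keys. Here is a minimal shell sketch of the same idea, handy for double-checking a merged key by hand. The function names and_keys and merge_features are hypothetical, and the sketch assumes every key has exactly four hex groups separated by dashes.

```shell
#!/bin/bash
# and_keys and merge_features are hypothetical names used for illustration.

# AND two feature keys group by group.
and_keys() {
    old_ifs=$IFS
    IFS=-
    set -- $1 $2          # split both keys into eight hex groups
    IFS=$old_ifs
    printf '%08x-%08x-%08x-%08x\n' \
        $(( 0x$1 & 0x$5 )) $(( 0x$2 & 0x$6 )) \
        $(( 0x$3 & 0x$7 )) $(( 0x$4 & 0x$8 ))
}

# Fold any number of keys into one key that only keeps the shared features.
merge_features() {
    merged=$1
    shift
    for key in "$@"; do
        merged=$(and_keys "$merged" "$key")
    done
    printf '%s\n' "$merged"
}

# The two keys from the example output above.
merge_features 0098e3fd-bfebfbff-00000001-28100800 0000e3bd-bfebfbff-00000001-20100800
```

Passing the keys of every node in one call gives the key that all nodes have in common, which should match what the script reports on the last node.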

A Poor Man’s Solution for Automatic ISP Failover

This file should be installed on your router. In my case, I was testing with a VyOS router so I was able to easily extend it with this script. Just paste this into a new file and chmod +x to make it executable. Update the IP information below – most importantly, your LAN information. Then add it to a cron job and have it run once per minute. That’s it!

#!/bin/bash

PATH=$PATH:/bin:/usr/bin:/sbin:/usr/sbin

# LAN IPs of ISP Routers to Use as the Default Gateway

PREFERRED="10.1.10.1"

ALTERNATE="10.1.10.3"

# Public Internet Hosts to Ping

PUBLICHOST1="8.8.8.8"

PUBLICHOST2="8.8.4.4"

# Ping the first public Internet device first. See if it fails.

RETURNED=`/bin/ping -c 5 $PUBLICHOST1 | grep 'transmitted' | cut -d',' -f2 | sed -e 's/^ *//' | cut -d' ' -f1`

# If the first pings fail, check the secondary Internet device.

if [ $RETURNED -eq 0 ]; then # Do a second check, against a different IP, just to be sure. Fewer pings, since we are already pretty sure of an issue.

echo "Failed ping test to $PUBLICHOST1. Trying against $PUBLICHOST2."

RETURNED=`/bin/ping -c 2 $PUBLICHOST2 | grep 'transmitted' | cut -d',' -f2 | sed -e 's/^ *//' | cut -d' ' -f1`

fi

# If it still fails, assume that the Internet is down.

if [ $RETURNED -eq 0 ]; then

echo "Everything is not ok. Looks like the Internet connection is down. Better switch ISPs."

date +%s > /tmp/cutover-start.log

else

echo "Everything is ok. Checking if we are using the preferred connection."

CURRENT=`route -n | grep "^0.0.0.0" | cut -d' ' -f2- | sed -e 's/^ *//' | cut -d' ' -f1`

if [ -f /tmp/cutover-start.log ]; then

LASTSWITCHTIME=`cat /tmp/cutover-start.log | sed -e 's/\n//'`

fi

NOW=`date +%s`

TIMEDIFF=$((NOW - LASTSWITCHTIME))

if [ "$CURRENT" == "$ALTERNATE" ] && [ $TIMEDIFF -gt 300 ]; then

date +%s > /tmp/cutover-start.log

echo "Testing if the primary gateway is back online."

route add -host $PUBLICHOST2 gw $PREFERRED

# Ping the host that is temporarily routed through the preferred gateway.

RETURNED=`/bin/ping -c 5 $PUBLICHOST2 | grep 'transmitted' | cut -d',' -f2 | sed -e 's/^ *//' | cut -d' ' -f1`

if [ $RETURNED -eq 5 ]; then

echo "Switching back now that primary ISP is back online."

route del -net 0.0.0.0/0

route add default gw $PREFERRED

else

echo "The primary host is not back online yet."

fi

route del -host $PUBLICHOST2

fi

exit

fi

# If the script does not exit before it reaches this point, then assume the worst. Time to cutover to the other ISP

CURRENT=`route -n | grep "^0.0.0.0" | cut -d' ' -f2- | sed -e 's/^ *//' | cut -d' ' -f1`

# Delete the existing default gateway

route del -net 0.0.0.0/0

if [ "$CURRENT" == "$PREFERRED" ]; then

echo "Switching to the alternate ISP $ALTERNATE"

route add default gw $ALTERNATE

else

echo "Switching to the default ISP $PREFERRED"

route add default gw $PREFERRED

fi
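The two decisions at the heart of the script (how many pings came back, and which gateway to switch to) can be tried out without touching the routing table. This is only a sketch under assumptions: parse_received and next_gateway are hypothetical helper names, and the sample summary line follows the format printed by the Linux ping command.

```shell
#!/bin/bash
# parse_received and next_gateway are hypothetical helpers for illustration.

# Read ping output on stdin and print the number of packets received.
# Prints 0 when no summary line is found (for example, ping could not run).
parse_received() {
    awk -F', *' '/transmitted/ { split($2, f, " "); n = f[1] } END { print n + 0 }'
}

# Print the gateway to switch to: $1 = current, $2 = preferred, $3 = alternate.
next_gateway() {
    if [ "$1" = "$2" ]; then
        echo "$3"
    else
        echo "$2"
    fi
}

# Sample summary line, not real measurement data.
printf '5 packets transmitted, 5 received, 0%% packet loss, time 4005ms\n' | parse_received
next_gateway 10.1.10.1 10.1.10.1 10.1.10.3
```

In the real script, the output of /bin/ping would be piped into parse_received in place of the sample line.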

Why the public cloud is the fast food of the IT industry.

The challenge for any IT manager is how to meet business requirements with technology. More often than not, those requirements dictate rapid deployment. The cloud excels at this, since the infrastructure is already available and waiting to be used. Rapid deployment, just as with fast food, does not guarantee quality, however. In fact, the performance and reliability of public cloud services are currently variable and largely unpredictable. Despite the high availability of fast food restaurants, people do not eat fast food for breakfast, lunch, and dinner. They know that the quality of the food is sub-par, and they intuitively recognize that its usefulness runs out when speed and short-term cost are not the two foremost drivers. The same holds true for public cloud services. They excel at speed-to-deploy and cost-of-entry for a solution. And, just as with fast food, public cloud services are almost irresistibly appealing on the surface. But after considering quality and long-term costs, it is clear that the best solutions remain in private clouds.

Cloudsourcing – What is this “IT revolution” all about?

First there were servers. Each server had a single purpose. In some cases, 90% of the time, they ran idle — a big waste of resources (processor, disk, memory, etc.)

Then there were server farms, which were groups of servers distributing loads for single applications. Distributing loads to multiple servers allowed for higher loads to be serviced, which allowed for tremendous scalability.

Then there was SAN storage, the aggregation of disks to provide performance, redundancy, and large storage volumes. A company could invest in a single large-capacity, highly-available, high-performance storage device that multiple servers could connect to and leverage. No more wasted disk space.

Then there was virtualization, the concept of running more than one server on the same piece of hardware. No more wasted memory. No more wasted processors. No more wasted disk space. But the management of isolated virtual servers became difficult.

Then there was infrastructure management, the idea that we could manage all of our servers from a single interface. No more wasted time connecting to each server to manage it individually. But there were still inefficiencies when managing the applications and configuration of the virtual servers.

Then there was devops, the concept of having software scripts manage the configuration and deployment processes for virtual or physical servers.

… With all of these inefficiencies addressed, you would think that there is no more room for improvement. But we do have a “gotta-have-it-now” society, and in this age of fast-food and mass produced goods, we had to see this coming. The “Cloud”. The “cloud” is the fast-food of servers. It is the idea that someone else can leverage all of the aforementioned concepts to build and manage an infrastructure much larger than yours, and cheaper and faster (per unit) than you can. It is the idea that economies of scale drive costs so low for the cloud provider that those savings translate into big savings for the companies or people that leverage them. We all use “the cloud” in some way. Microsoft has OneDrive/SkyDrive, Google has Google Drive, and then there’s Dropbox. All of these services represent “the cloud” for individual people. But there are clouds for companies, too, like Amazon’s AWS, Google’s Cloud Platform, Microsoft’s Azure, etc.

So how do we know that the cloud is good for a company? Well, it’s really difficult to tell. For one, the traditional model for companies was one where they owned their infrastructure. All expenses were considered capital expenses – that is, there were not recurring expenses for their infrastructure equipment (except perhaps for software components). But when it comes to cloud infrastructure, the business model is, of course, the one that profits the cloud owner the most — the holy grail of business models — the recurring revenue stream known in IT speak as Infrastructure As A Service. Cloud businesses are booming right now! The big question is – is the “cloud” as good for the customer as it is for the provider?

In my experience, owning hardware has distinct advantages. The most recognizable difference is that a company can purchase infrastructure while sales are up, and then coast along on that infrastructure equity when sales dip. In my experience, once hardware is paid for, the recurring cost to run and maintain it is much less than the cost of any cloud solution. After all, the cloud providers need to pay for their infrastructure, too. They pass that cost on to you after padding it with their soon-to-be profits, so your recurring costs with them will potentially always be higher than they would be if you owned your infrastructure. If you were to transform your initial investment into an operational expense and distribute it over the lifespan of the equipment (let’s say, 5 years), and then add in the cost of ownership of that equipment, the numbers become close enough that I am comfortable declaring that at least the first year of ownership will be a wash. In other words, if you intend to own hardware for a year or less, then the cloud is really where you should be. The longer that you intend to continue to extract use out of your hardware, though, the more appealing it is to own your own rather than use the cloud.

To put this into perspective… it is common for townships to employ a mechanic to maintain their vehicles. If townships were to, instead, lease all of their vehicles, the mechanic would not be necessary and costs could initially be reduced. But, over time, the value that the mechanic introduces would more than pay for his salary. Companies have mechanics, too, except that they are called systems administrators. They keep systems running and squeeze every last drop of efficiency out of a piece of hardware. If you are a company without “mechanics”, the cloud is for you. But if you do have systems administrators in your employ, then yet another question needs to be considered. What is the reason for leveraging the cloud? If your answer is, “Because it’s the latest trend.”, then you need to take a step back and reconsider taking another sip of the kool-aid that you have been drinking. If your answer is, “We have been growing rapidly and our infrastructure cannot keep up.”, then perhaps the cloud is the right spot for you. If your answer is, “Our business is seasonal and sometimes we have a lot of wasted infrastructure that is online, but unused.”, then perhaps the cloud is for you.

There are other cases, and the math certainly needs to be done. Economies of scale make this topic interesting because it is certainly plausible that one day cloud services will be cheaper than owning your own infrastructure. There will always be the differences in CapEx vs OpEx, and that requires an assessment of your sales patterns before taking any plunge into the cloud. One thing is certain, though. The cloud is not for everyone. Assess your business needs, assess the competence of your IT group, assess your revenue streams, and then make a careful and calculated decision. But whatever you do, don’t do it just to do it. Because chances are that the results will not meet your business needs.

What constitutes a great leader?

A leader is a driver, meaning that leaders must be good decision makers and must be capable of choosing a destination. When judged in hindsight, the destination must be meaningful and productive. Just like a driver of a car, a great driver will act upon the passenger-spoken words, “We need to take the next exit.” A bad driver will disregard all advice. As drivers, we may know where we want to go, but it is our passengers who have the best view of the road. It is our passengers who are in the best position to navigate. Good drivers take us to our destination. Great drivers rely on great navigators to help them get us to our destination in the best possible way.

Heeding the advice of “passengers” is vitally important to the effectiveness of a great leader.

Let’s consider the fail-forward (trust) and fail-stop (mistrust) approach to governance. The term “fail” refers to the failure of a tactical decision/action to comply with a strategic goal. The term “forward” means that the short-term tactical action may proceed if it means that it will “keep the lights on”. The term “stop” means that in the interest of meeting a strategic goal, a tactical action is forbidden and the company suffers consequences as a result of inaction, “lights out”. Fail-forward requires trust because the time and effort taken to assess an urgent tactical action may jeopardize the effectiveness of that action and may therefore jeopardize the health of the company. Fittingly, fail-stop reflects mistrust because the reflexive blocking of a tactical action for the sake of improved alignment with a strategic goal does not take into account upward feedback and therefore cannot allow for the trust of tactical decision makers.

A great leader fails-forward, meaning that there is a healthy bi-directional trust relationship both up and down the hierarchical chain of responsibility. A great leader empowers tactical decision makers with the ability to navigate. A great leader recognizes that the acceptance and accommodation of non-ideal elements is often crucial to accelerating the journey to our destination (success) – in the same way that a driver might take a detour to avoid a road closure rather than waiting for the road to re-open for the sake of not diverting from his/her predetermined ideal route.

We are all leaders, navigators, and followers at different times. When leading, if our decisions would be the same with or without the input of our navigators, then perhaps it is time to rethink whether we are great leaders, good leaders, or shamefully bad leaders.

The Veterans Affairs Scandal

Everyone is furious about the way that our war veterans have been treated, both in the past and in the present. The long waiting lists for medical procedures and the mistreatment of our veterans have caused quite an outrage – and rightfully so. Now there are stories in the news about heads rolling – officials in charge being dismissed from their posts. The problem that I have with this sort of thing is that I believe that it’s purely a public relations move.

It is unreasonable to believe that the head of the VA in a state or town was making policy decisions for the whole country. More than likely, it was budget cuts that were passed in Washington D.C. that put the VA officials between a rock and a hard place. Of course, it’s entirely possible that these guys had the budget and just decided to mistreat veterans. But how many people would do that? What would their motivation be? It is far more reasonable to believe that they got a bad deck of cards and they were trying to make do with it. Now the people holding the cards are the ones that are losing their jobs, but I think it really should be the people who dealt the cards who need to address the nation. They must have had difficult decisions to make, and hearing their thought process would certainly add some clarity over who is at fault, or at least the rationale behind the decisions.

Abstract Technology Concepts – Devops

When we think about technology, we often think of it as abstract concepts. For example, we think of a “telephone”, not a network of transducers wired through hubs and switches that create temporary channels that bridge calls together. It is natural for us to add layers of abstraction that help us to understand the purpose or use of something. In programming languages, we saw the rise of object oriented programming – the ability to define an object and then manipulate its attributes. The Windows operating system is a layer of abstraction that you interact with. These days, you can accomplish, with scripts (PowerShell), most of what you can do with a mouse. That brings me to the latest layer of abstraction – devops. It’s a concept that says that developers and operations join forces and share methodologies for the betterment of the company that they represent. For example: With a traditional sysadmin mindset, a systems administrator might click a button every day to accomplish a task. But a developer would write a program that automates pressing that button to gain efficiency. So devops is really about applying development concepts to systems administration. Aside from potential gains in efficiency, there are also gains in consistency, since the code will not forget to press the button – and it will press the button each day exactly the same way as the day before. Consistent input means more consistent output. So the task can be considered more reliable.

Sounds great, right? Why didn’t we do this 10 years ago?

Well, the truth is that in the Windows world, the GUI is king. But in the Linux world, the command-line is king. Automation in Linux is much easier to accomplish. Linux systems administrators have been practicing the concept of devops for quite some time now – it just never had a name. Microsoft has just in the past several years released tools, such as PowerShell, that allow Windows Systems Administrators to practice devops. I consider the devops mindset a tool because it is a concept that has a specific function. The function of devops is to automate, automate, automate. Like any other tool, there are problems that it is the solution for, and problems where it just does not fit.

So where does devops not fit? Devops is all about automation, so naturally, anything that we do not want to automate is probably not a fit for the devops paradigm. Here are a few examples of things that should not be automated:

- User account information entry – someone needs to type this information at some point. From there forward, we can automate though.

- Buildout of the automation scripts – this is a tedious manual process that can possibly be partly automated, but not fully automated.

- Image building – I am talking about base images. It is not that this cannot be automated. It is that in most cases, building a single image manually is more efficient than building a set of scripts to build it.

Devops is a great way to look at things, and it’s about time that the Linux mentality has spread to Windows. But as you might already understand by now, the effort required to automate is considerable. Devops is an abstract concept, just like the telephone is. Underneath the devops concept are additional processes and procedures that, without devops, would not be required. If you think of devops as a layer of abstraction, then you will quickly recognize that just like virtualization, or object oriented programming, there is a penalty when applying it. In most cases, the productivity/reliability gains of applying devops concepts far outweigh the penalty of implementing it, though.

Conclusion

Devops is best applied when the amount of manual work to be done is significantly greater than the amount of work required to automate it.

When considering whether or not to apply devops practices to an IT function, consider if the functional requirements vary too much to be automated, or if the function is used too infrequently to warrant the additional overhead required by the application of devops practices (automation). Remember the goals. Improve efficiency, improve consistency, improve reliability. Prioritize those goals, and then determine if the act of implementing devops is expected to hinder any of them. If the answer is no, devops does not hinder any of these goals, then go ahead and apply it. But if devops challenges your most important – top priority – goals, then perhaps reconsider its application.
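One rough way to put a number on that trade-off is a break-even count: how many times must a task run before the one-time effort of automating it is repaid by the manual effort saved on each run? The sketch below is only an illustration. break_even_runs is a hypothetical helper, the figures are made up, and it ignores ongoing maintenance of the automation itself.

```shell
#!/bin/bash
# break_even_runs is a hypothetical helper; all numbers below are made up.
# $1 = one-time effort to build the automation
# $2 = manual effort saved per run (same unit as $1, must be greater than 0)
# Prints the number of runs needed to recover the build effort, rounded up.
break_even_runs() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

# Example: 480 minutes to script a task that takes 5 minutes by hand.
break_even_runs 480 5
```

If the task is expected to run far fewer times than the break-even count, that is a hint that it falls into the "used too infrequently" case described above.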

Improve Zenoss Performance – Infrastructure Load Time

Q: Does the infrastructure page take a really long time to load for you?

A: Take a look at your open event log entries. Every open event gets indexed when the page loads. If you want the page to load faster, simply close as many events as is prudent for your historical needs.

If that fails to help, follow this guide to tune the caching and multi-threading options:

http://wiki.zenoss.org/Zenoss_tuning